I am a Tenure-Track Assistant Professor in the CSE department at the University of Louisville (UofL), and I am also affiliated with the Louisville Automation & Robotics Research Institute (LARRI). I received my PhD in Information Sciences and Technology from the Pennsylvania State University, and my Bachelor’s and Master’s degrees from Tsinghua University. During my PhD, I also worked as a research intern at Snap Research, Kitware, and Toyota Research Institute.

I lead the Intelligent Vision and Interaction (InVision) Lab. Our research vision is to advance artificial intelligence to better understand our world and augment human capabilities. To achieve this, our work bridges foundational research in visual representation and synthesis with human-centered applications, focusing on creating trustworthy AI for society and pioneering new tools for medicine and healthcare.

- Visual Representation and Synthesis: We build computational models that learn rich representations of the visual world. Our lab develops novel methods for machines to perceive their environment and synthesize new visual content, from reconstructing scenes to generating novel imagery. By creating powerful and flexible visual representations, we are laying the essential groundwork for more capable robotics and interactive AI.

- Human-Centered and Trustworthy AI: We design and evaluate intelligent systems that augment human capabilities and foster equitable human-AI collaboration. This research ranges from creating novel assistive technologies for people with disabilities to developing fair and privacy-preserving machine learning models for society.

- AI in Medicine and Healthcare: We develop robust and reliable AI to tackle high-stakes challenges in healthcare. Our goal is to enhance the quality of medical imaging and create specialized machine learning systems for tasks like segmentation and anomaly detection, providing powerful tools to support clinical decision-making.

📣 Call for Papers

I am serving as a guest editor for the special issue “Human-Centered Artificial Intelligence” in the journal Future Internet (ISSN 1999-5903). Submission deadline: February 28, 2026.

📰 Recent News

- 01/2026: Paper on responsible AI for children accepted to CHI 2026

- 12/2025: Awarded Jon Rieger Seed Grant as sole PI

- 10/2025: Recognized as a Top Reviewer for NeurIPS 2025

- 09/2025: Awarded 2025 NVIDIA Academic Grant as sole PI. Grateful to NVIDIA for the support!

- 07/2025: Honored with the Best Student Paper Award at ECML-PKDD 2025 🏆

- 06/2025: Two papers accepted to ICCV 2025: Top2Pano and DAC

- 06/2025: Appointed Area Chair for WACV 2026

- 05/2025: One paper accepted to MICCAI 2025

- 05/2025: One paper accepted to ECML-PKDD 2025

- 03/2025: Review paper on layout generation accepted to Computational Visual Media (CVMJ); to be presented at CVM 2025

- 02/2025: Two papers accepted to CVPR 2025: ZeroPlane and PlanarSplatting. See you in Nashville!

- 01/2025: Paper on interactions of visually impaired users with LMMs accepted to CHI 2025

- 11/2024: Awarded NSF Campus Cyberinfrastructure (CC*) grant as co-PI. Grateful for the support!

- 05/2024: Three papers accepted to MICCAI 2024

- 01/2024: Paper on privacy-preserving remote assistance accepted to CHI 2024

- 01/2024: One paper accepted to ICRA 2024

📚 Selected Recent Publications [Full List]

🤖 Visual Representation and Synthesis

| Top2Pano: Learning to Generate Indoor Panoramas from Top-Down View |

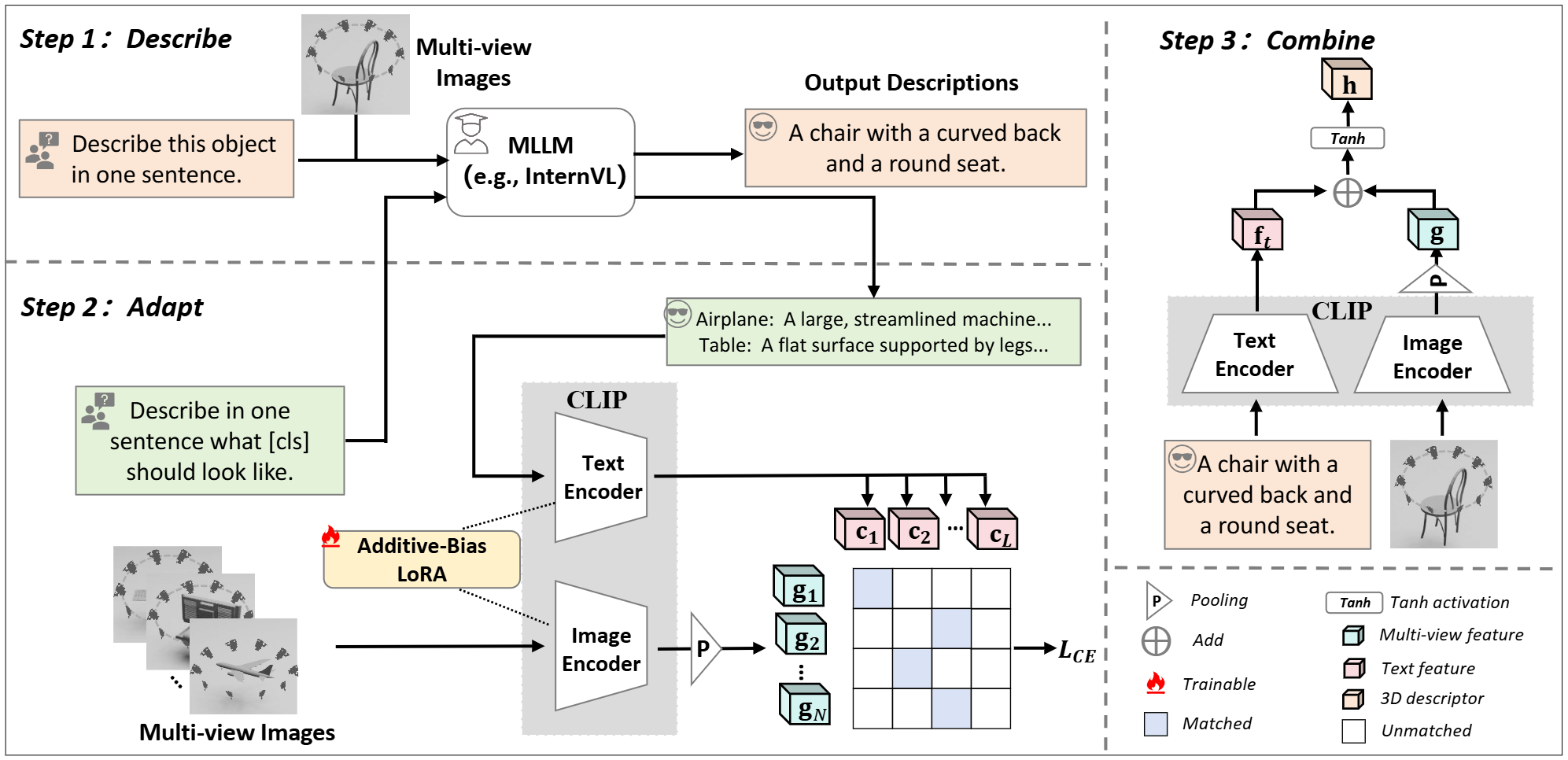

| Describe, Adapt and Combine: Empowering CLIP Encoders for Open-set 3D Object Retrieval |

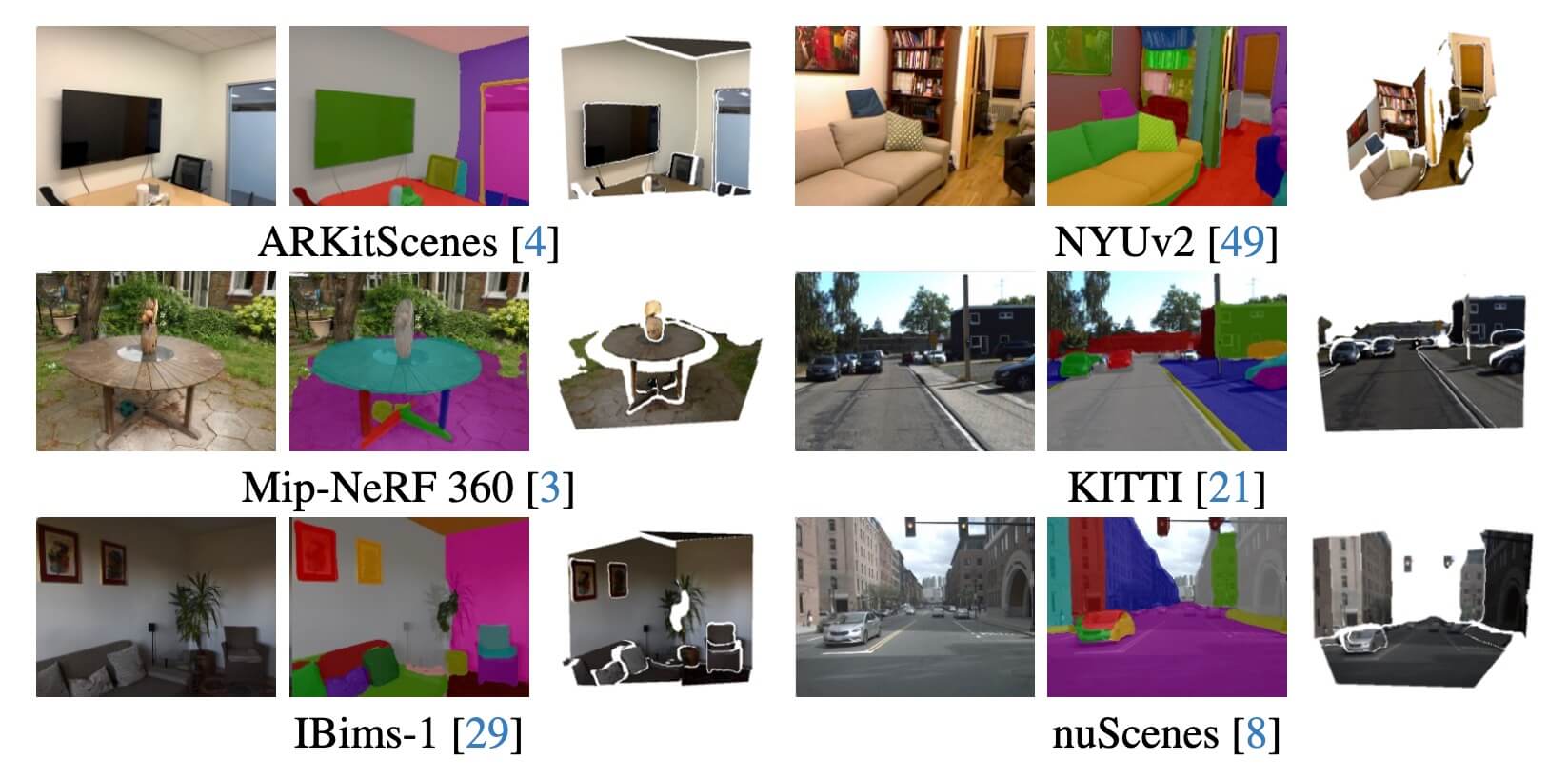

| Towards In-the-wild 3D Plane Reconstruction from a Single Image |

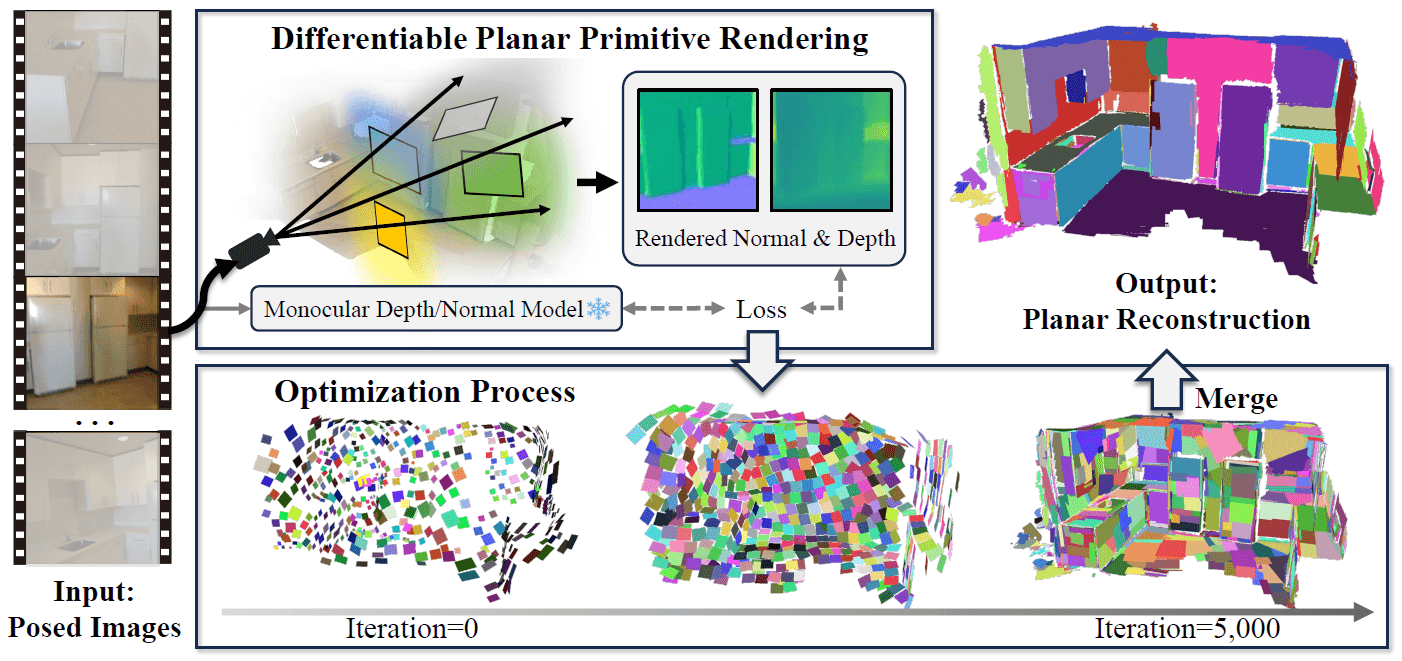

| PlanarSplatting: Accurate Planar Surface Reconstruction in 3 Minutes |

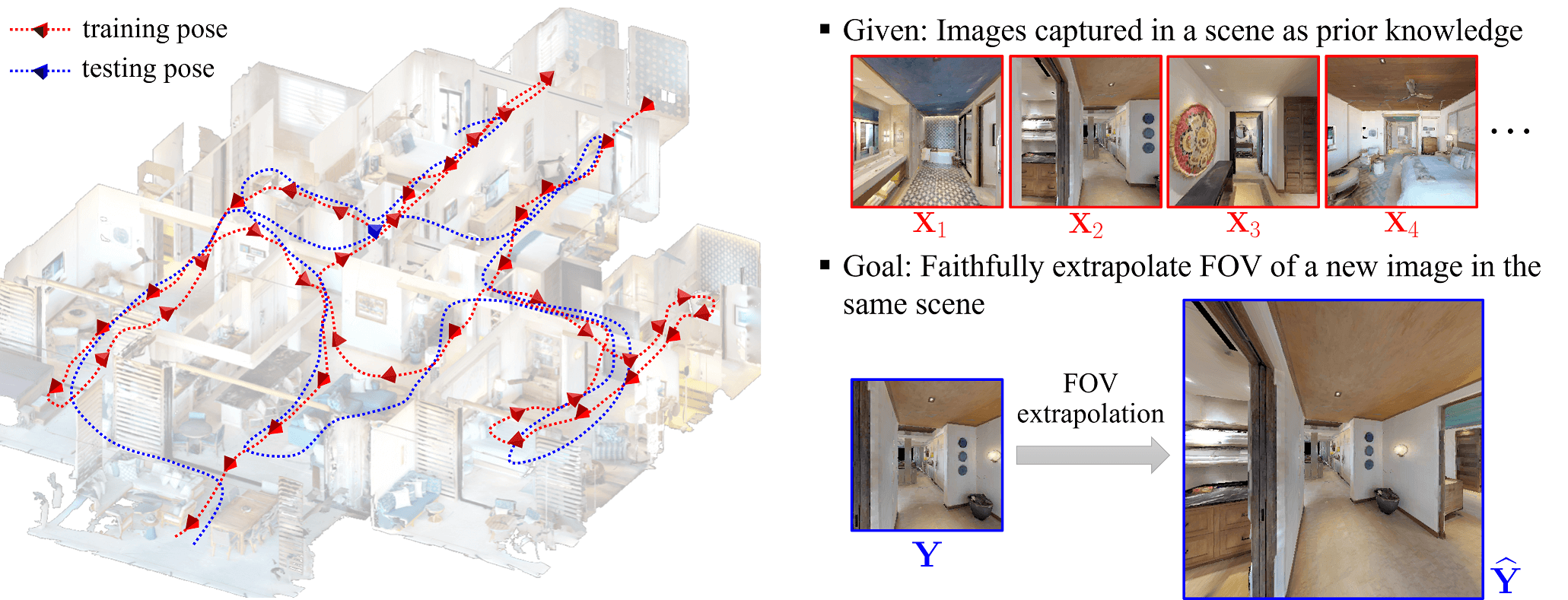

| NeRF-Enhanced Outpainting for Faithful Field-of-View Extrapolation |

| Be Real in Scale: Swing for True Scale in Dual Camera Mode |

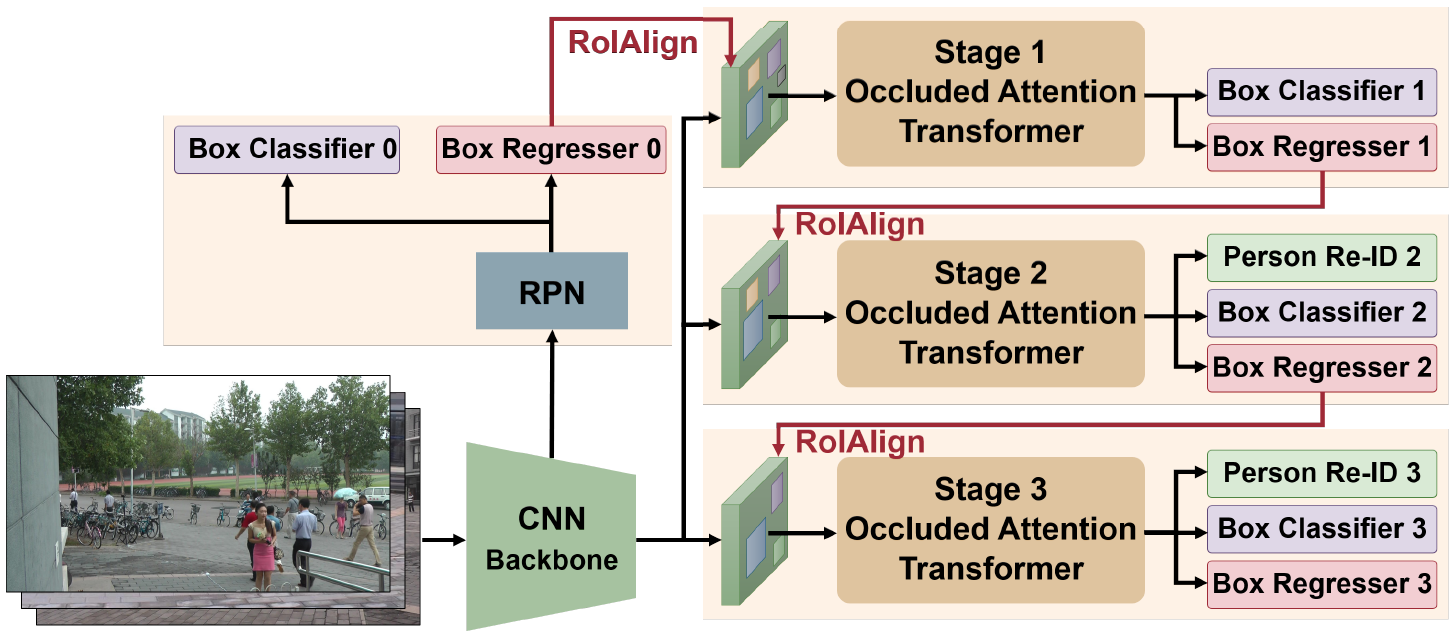

| Cascade Transformers for End-to-End Person Search |

🤝 Human-Centered and Trustworthy AI

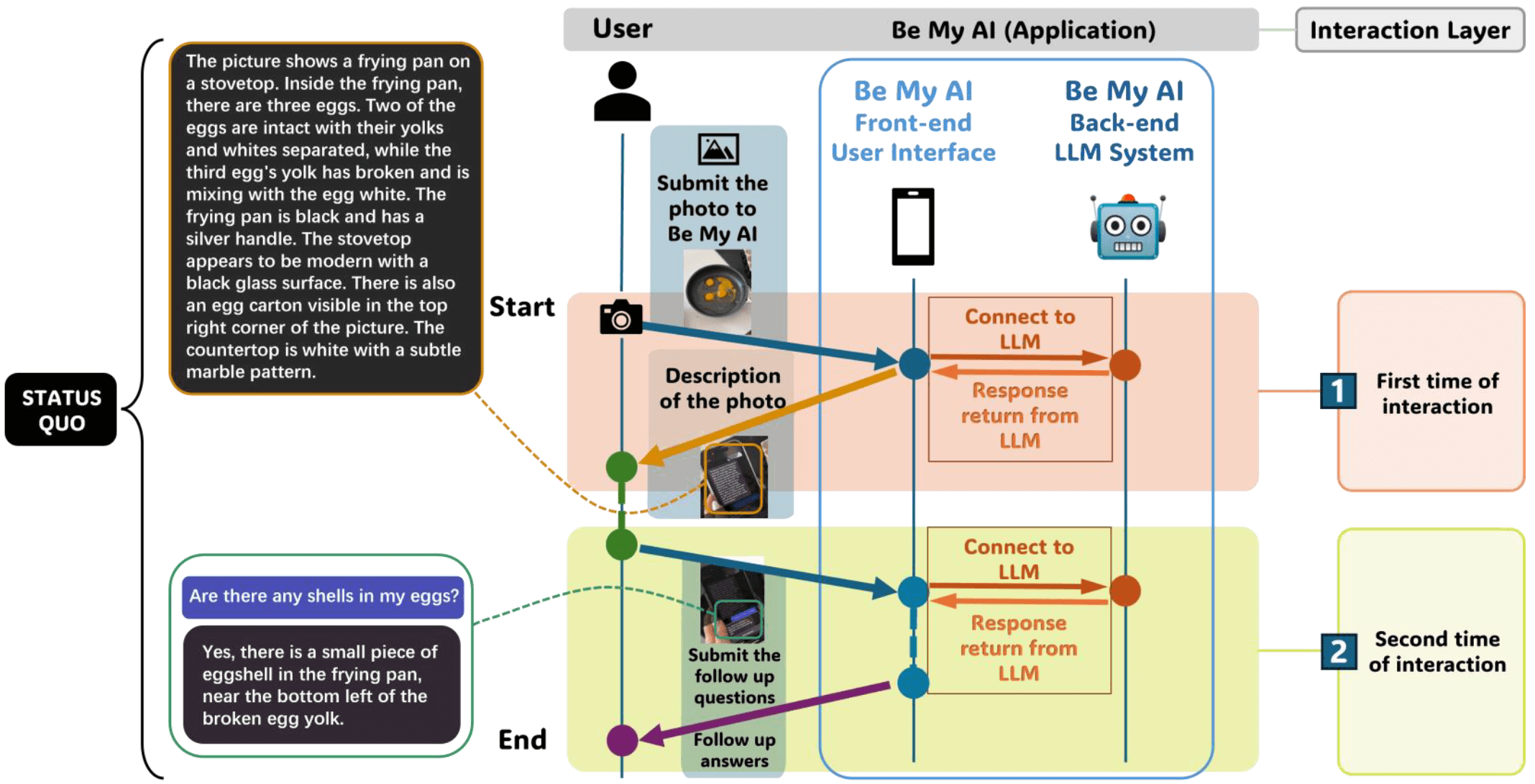

| Beyond Visual Perception: Insights from Smartphone Interaction of Visually Impaired Users with Large Multimodal Models |

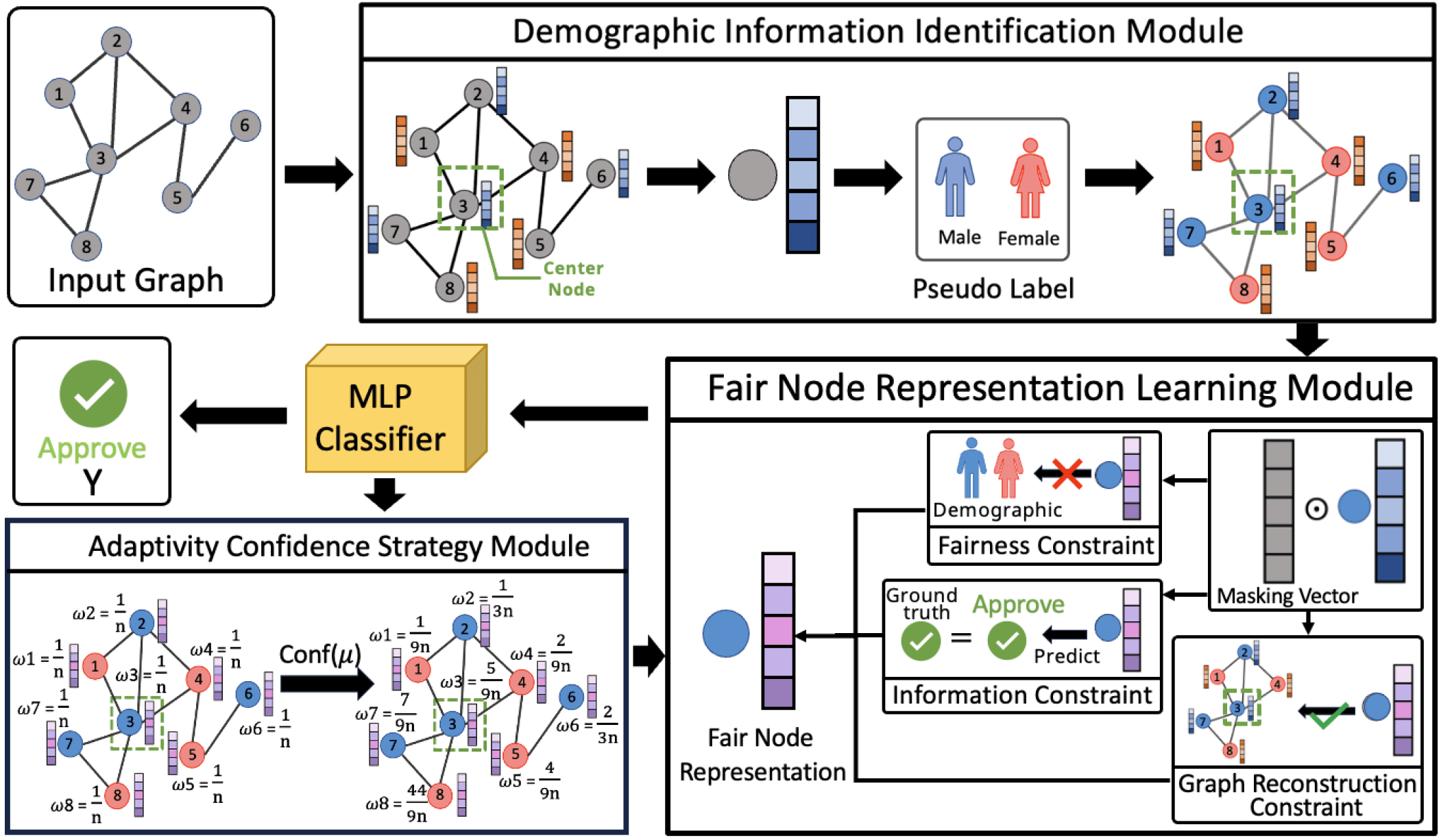

| Fairness-Aware Graph Representation Learning with Limited Demographic Information |

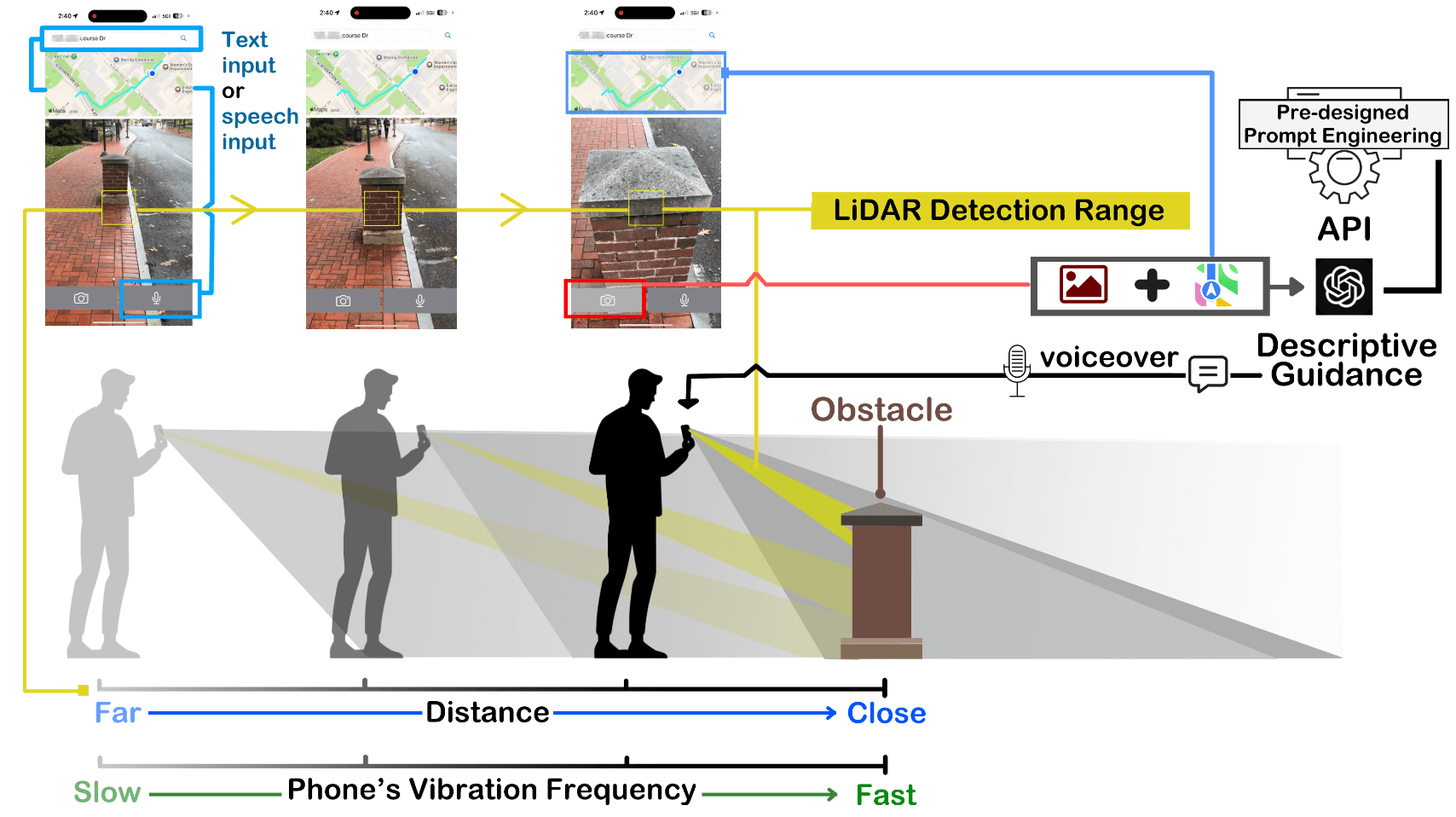

| Enhancing the Travel Experience for People with Visual Impairments through Multimodal Interaction: NaviGPT, A Real-Time AI-Driven Mobile Navigation System |

| BubbleCam: Engaging Privacy in Remote Sighted Assistance |

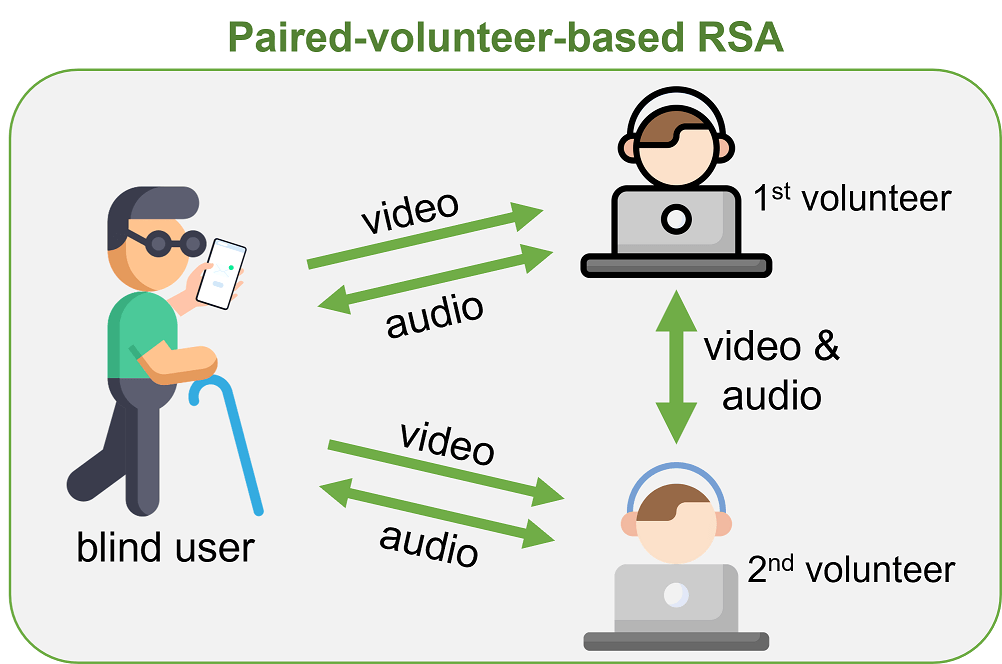

| Are Two Heads Better than One? Investigating Remote Sighted Assistance with Paired Volunteers |

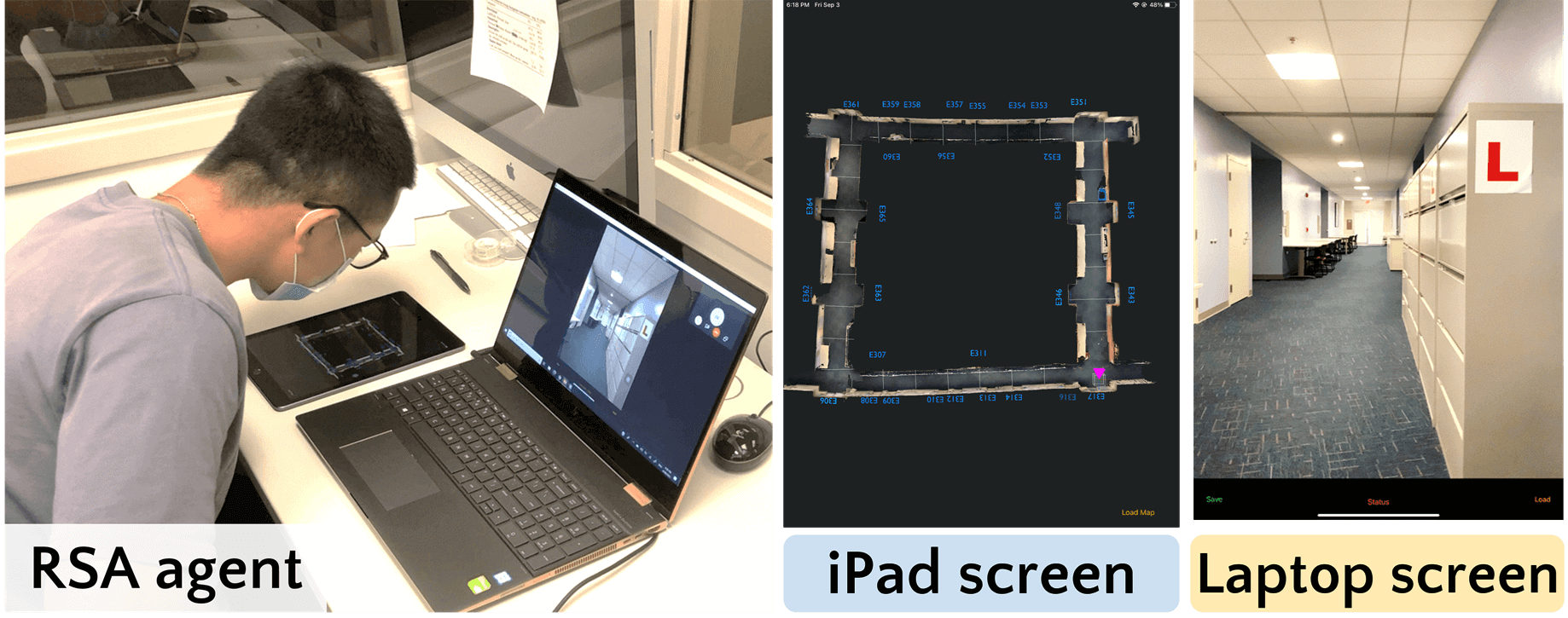

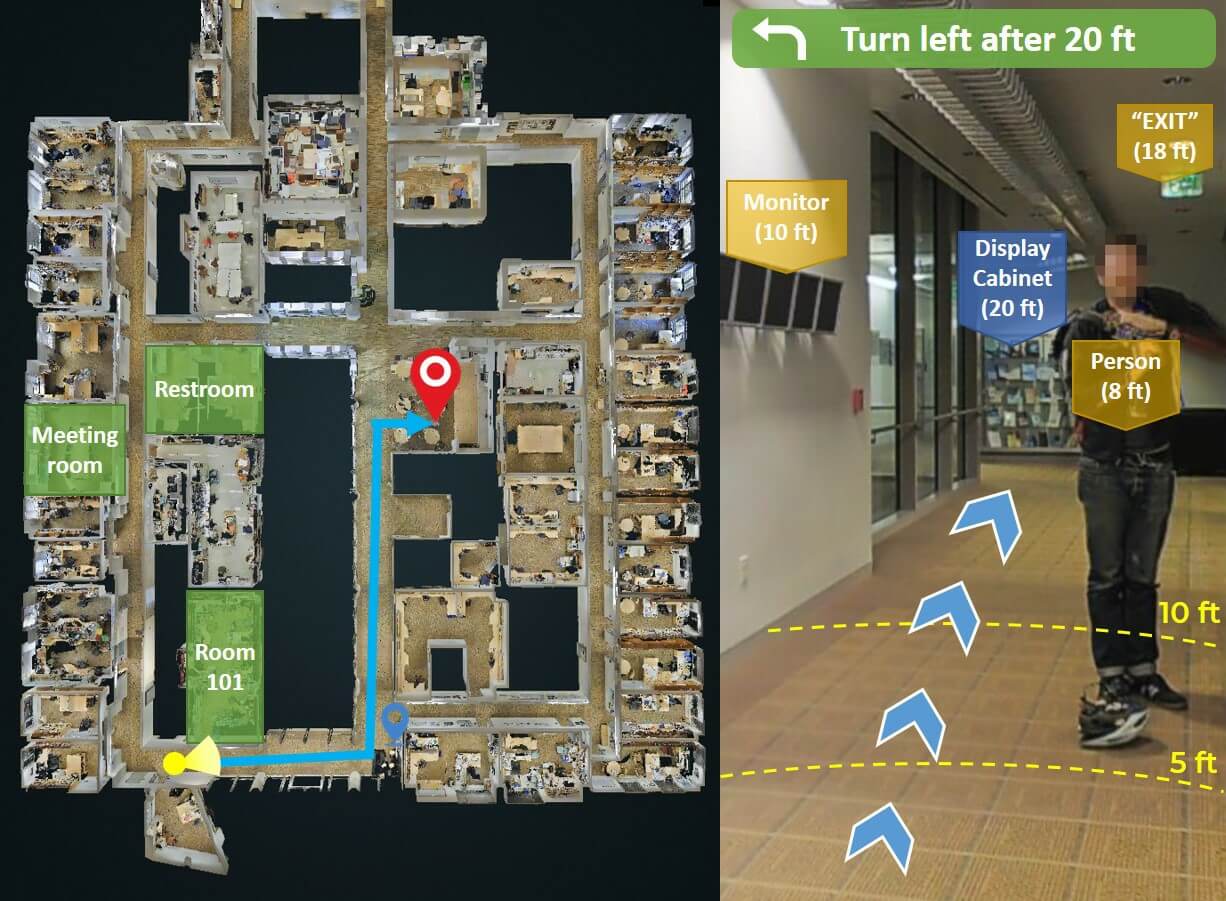

| Helping Helpers: Supporting Volunteers in Remote Sighted Assistance with Augmented Reality Maps |

| Opportunities for Human-AI Collaboration in Remote Sighted Assistance |

🩺 AI in Medicine and Healthcare

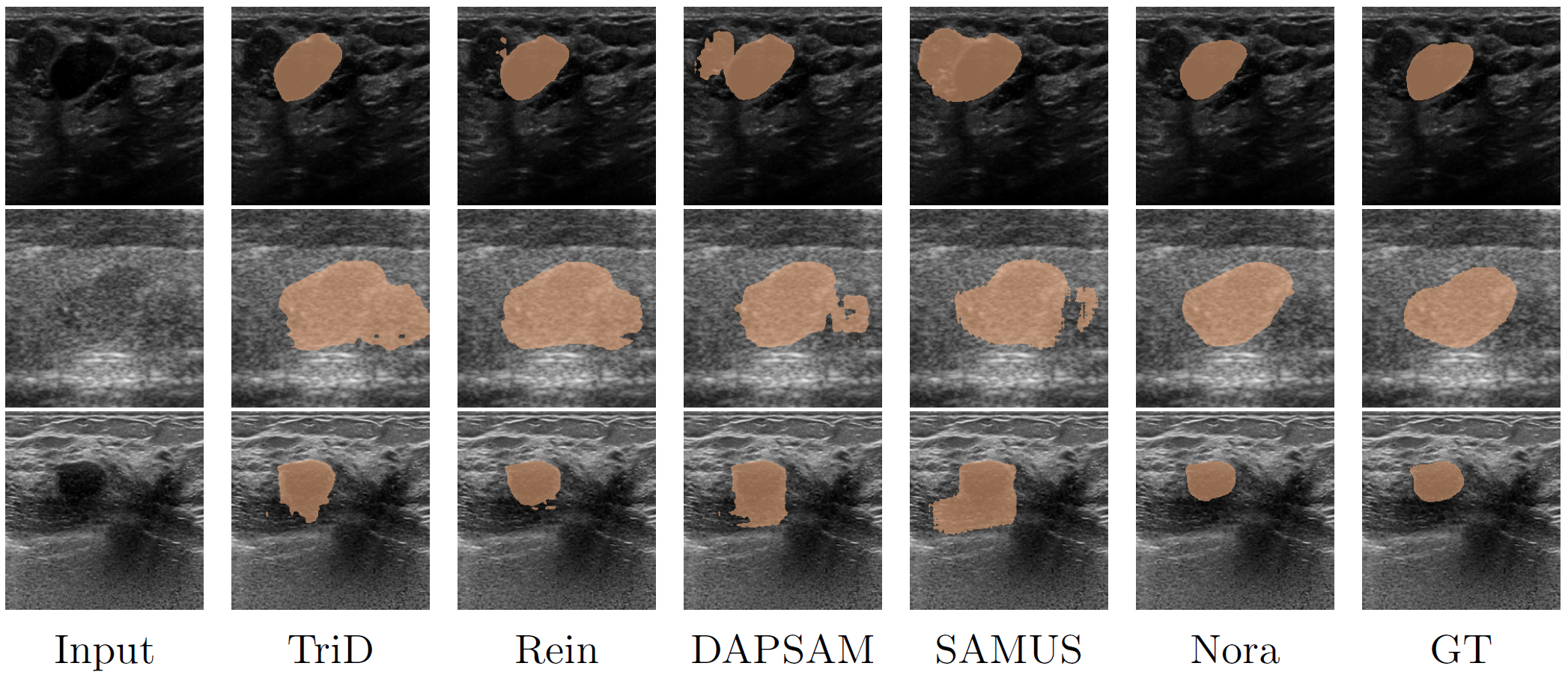

| Noise-Robust Tuning of SAM for Domain Generalized Ultrasound Image Segmentation |

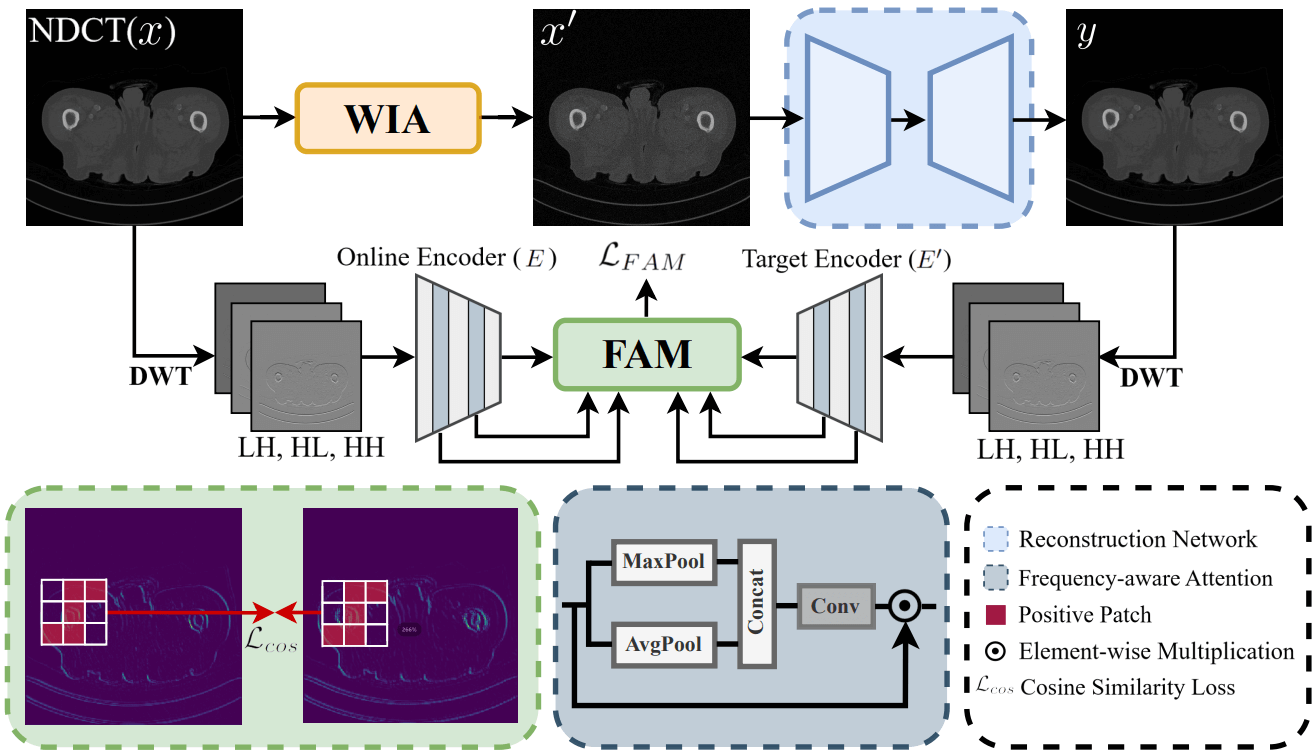

| WIA-LD2ND: Wavelet-based Image Alignment for Self-supervised Low-Dose CT Denoising |

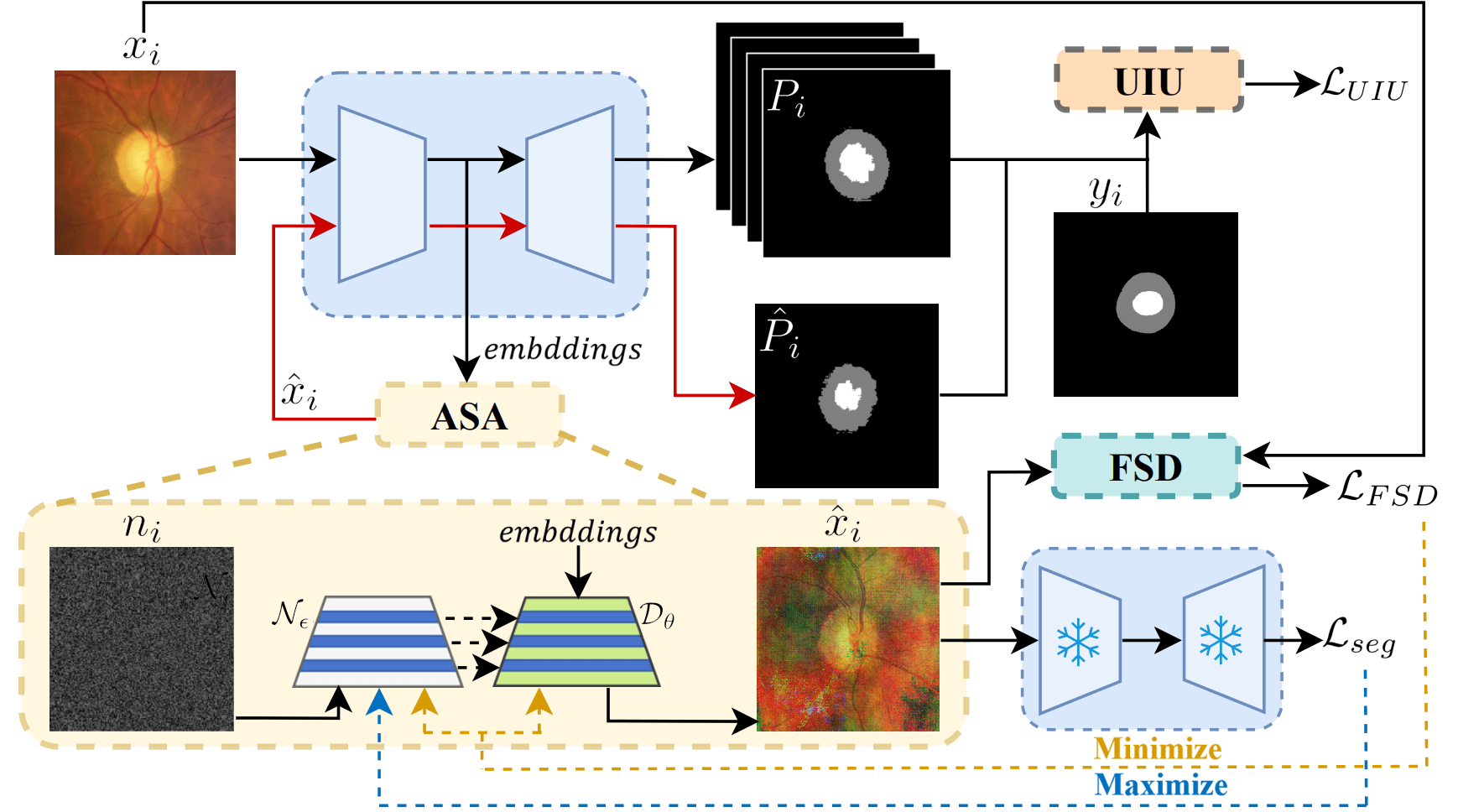

| MoreStyle: Relax Low-frequency Constraint of Fourier-based Image Reconstruction in Generalizable Medical Image Segmentation |

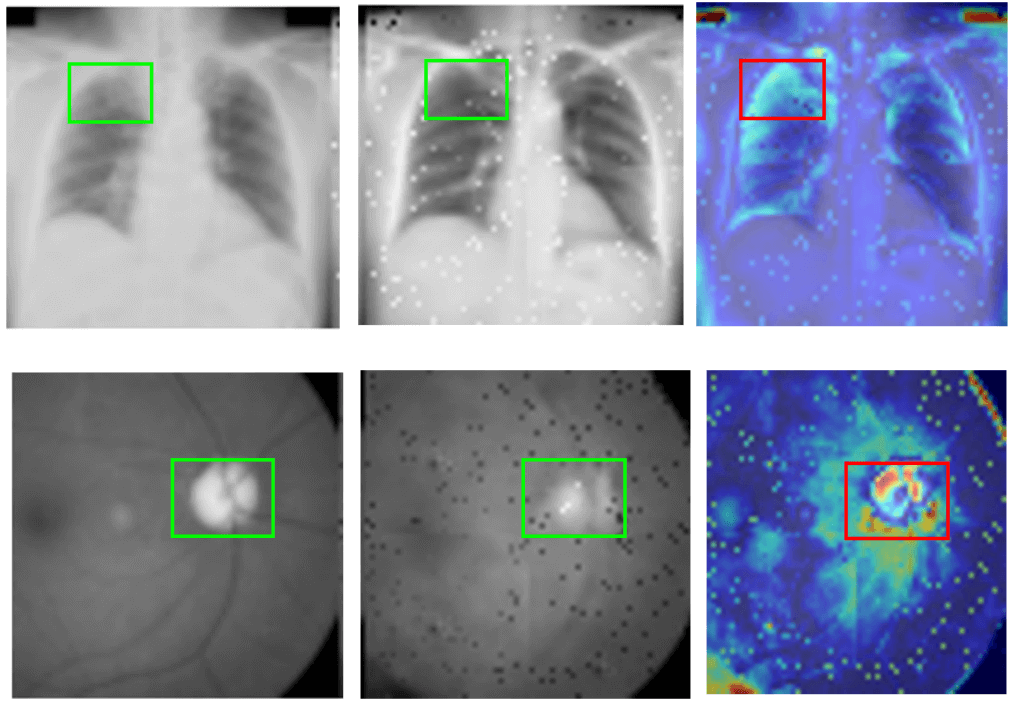

| Spatial-aware Attention Generative Adversarial Network for Semi-supervised Anomaly Detection in Medical Image |